Our Work

Building State Capability (BSC) empowers people to find context-appropriate solutions to their problems, thus improving the implementation of their policies and programs. We build organizational capability by delivering results.

Where We Work

We have trained and engaged with over 4,000 practitioners in 150 countries.

Our Projects

We convene implementation teams who work iteratively and autonomously to solve problems they have nominated themselves. The teams learn new problem-solving tools and achieve results as well as tangible capacity gains.

SEPTEMBER – DECEMBER 2022

PDIA for Education Systems

BSC developed a custom PDIA for Education Systems online action learning program, funded by the United Kingdom's Foreign, Commonwealth & Development Office (FCDO), to help build the capability to improve foundational learning outcomes through practical, action-oriented work.

SEPTEMBER – DECEMBER 2022

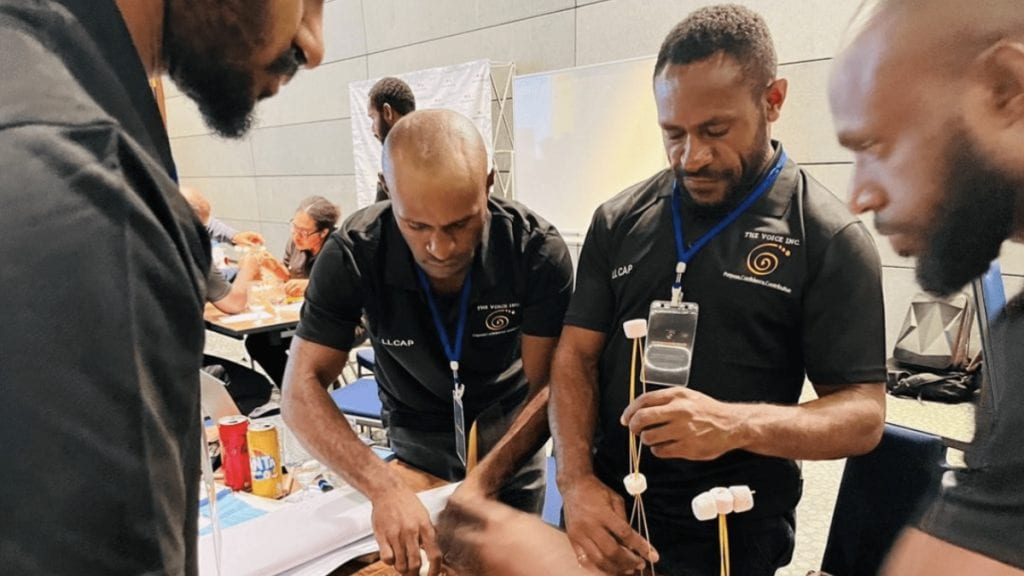

Building Local Leadership and Collective Action in Papua New Guinea

BSC led a virtual PDIA action learning program for The Voice Inc, a program funded by Australia's Department of Foreign Affairs and Trade (DFAT), to build the capability of local leaders to create broad coalitions for change.

Our Tools

We have a wide variety of tools and resources available to learn about the Problem Driven Iterative Adaptation (PDIA) approach and our work.

PDIA Toolkit

A do-it-yourself (DIY) toolkit for the PDIA approach, designed to guide you through solving complex problems, a process that requires working in teams.

Audio & Video Guides

These self-paced guides link to blogs and podcasts where you can learn about the PDIA process, the 4P model for strategic leadership, leadership in crisis, and more.

Publications

We regularly publish research in a wide range of academic and policy venues, including working papers, books, book chapters, articles, blogs, and reports.

From Our Blog

We have over 700 blog posts written by practitioners and policymakers on their experience using PDIA.

Engage with Us

We offer training programs and host events featuring researchers, practitioners, policymakers, and academics who are working to solve complex problems.

Training Programs

Based at Harvard Kennedy School, BSC trains and equips practitioners and policymakers around the world with the tools and resources to solve public problems. Learn more about our Executive Education programs, degree programs, and online programs.

Event Highlight

Reflecting on a Decade of Building State Capability around the World

October 19, 2023 | Harvard Kennedy School

Over the past decade, Building State Capability (BSC) has engaged with over 3,500 practitioners in 148 countries. In this session, the BSC team discusses the evolution of PDIA and its pedagogical methods and shares reflections on their journey of building capability and igniting change. The session also features the voices of practitioners from around the world who have used BSC's tools and approaches.

Subscribe to BSC

Sign up for our research newsletter to learn more about our team’s latest findings, papers, projects, podcasts, and more.

“Every country has its own red tape and hoops. It may take you years to change a tiny thing, increase efficiency, to implement innovation. But if you believe in your purpose and in what you’re doing, you have to be patient…learn PDIA because it will serve you as a North Star.”

Urkhan Seyidov

Implementing Public Policy 2019